What happened to April?!? I swear we were only a couple of weeks into it, and suddenly it’s over. I guess the same thing could be said about the first third of this year – it seems like a lot has happened in the world in a very short period of time.

Let me catch a quick breath and tell you what I’ve been up to lately:

- featured article: “Latency” isn’t just an issue in digital audio workstations; each digital module in your system could be introducing delays.

- Alias Zone updates: A couple of tracks sent off for mastering; a new compilation for a good cause.

- Learning Modular updates: My latest music video – The Cave – is now also available on the Learning Modular YouTube channel.

- Patreon updates: Two detailed posts on my new performance system, covering how I generate trigger patterns and notes used in both the modular and in the laptop – including how I coordinate the timing between the two. I also shared my experiences observing and working with Steve Roach.

- upcoming events: Live performances in May at Mountain Skies near Asheville, North Carolina, and in June at the Currents New Media Festival in Santa Fe, New Mexico.

- one more thing: Drones for Peace: A fundraiser for Ukraine that demonstrates “drone music” is far more varied than you might think.

Latency in Digital Modules

Quite often, I hear people who do not use modulars refer to them as “analog” synths, assuming that today’s modular synth movement is primarily about returning to earlier times. In reality, an increasing number of today’s modules have a digital component to them. Even something that doesn’t scream “digital!” like WMD’s Crater kick drum module combines both analog and digital sections to create its final sound.

I’m not going to argue about whether digital or analog sounds better; they each have their place, and digital modules can do things analog ones could only dream of. However, there is an issue with digital modules versus analog ones that very few talk about, and which does affect your music – and that’s how fast they respond to triggers for new notes and other control voltage changes.

The Problem

Analog modules respond to new triggers, changes in control voltage, and the like literally at the speed of electricity – which is very, very fast. Some analog components, such as capacitors and vactrols, can “slew” (slur) the incoming signal, slightly slowing down a module’s response, but in most cases you’re not going to hear it.

The process is nowhere near as straightforward with digital modules. First, they need to scan their input jacks to see what the current voltage is at each of them. Some modules scan trigger and control inputs at a slower rate than audio inputs to save processing power (and therefore cost); depending on the module, this scanning can be as slow as once every few milliseconds.

Second, their internal code needs to determine whether that voltage has changed (especially at trigger inputs) and what to do with those changes, including changing the current sound or triggering a new one. They might even add a small additional delay to the trigger, knowing that it can take the analog-to-digital conversion on the pitch CV input an extra millisecond or so to settle to its new value before they can correctly read its pitch. This total input-to-output delay can be under a millisecond, but I have measured it to be as much as 17 milliseconds in computationally intensive modules such as the Rossum Electro-Music Panharmonium Mutating Spectral Resynthesizer (more on that later).
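To see how those stages add up, here is a minimal Python sketch. It assumes, hypothetically, that a module reads its trigger input only on a fixed grid every `scan_period_ms`, then spends a fixed `processing_ms` computing the new sound, plus an optional `settle_wait_ms` it deliberately waits for the pitch CV to settle. The function names and the example numbers are illustrative, not measurements of any particular module.

```python
import math


def trigger_latency_ms(arrival_ms: float, scan_period_ms: float,
                       processing_ms: float, settle_wait_ms: float = 0.0) -> float:
    """Delay from a trigger edge reaching the jack to the new sound starting.

    Hypothetical model: the input is only read at scan times 0, T, 2T, ...,
    so an edge waits for the next scan before processing can begin.
    """
    next_scan_ms = math.ceil(arrival_ms / scan_period_ms) * scan_period_ms
    wait_for_scan_ms = next_scan_ms - arrival_ms
    return wait_for_scan_ms + processing_ms + settle_wait_ms


def latency_bounds_ms(scan_period_ms: float, processing_ms: float,
                      settle_wait_ms: float = 0.0) -> tuple:
    """Best case: the edge lands exactly on a scan. Worst case: it just misses one."""
    fixed_ms = processing_ms + settle_wait_ms
    return fixed_ms, scan_period_ms + fixed_ms


# Illustrative numbers: 2 ms scan period, 0.5 ms processing, 1 ms settle wait.
print(trigger_latency_ms(4.5, 2.0, 0.5, 1.0))  # 3.0
print(latency_bounds_ms(2.0, 0.5, 1.0))        # (1.5, 3.5)
```

Because the wait for the next scan depends on exactly when each trigger arrives, slow scanning shows up not only as a fixed delay but also as note-to-note timing jitter within that range.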

Even larger delays can appear when there is a module – for example, a sequencer – in the middle that is converting clocks to gates and pitch control voltages. Again, this timing delay can be under a millisecond, to as much as 10 ms in the sequencers I use. This number often gets much larger if there is a computer plus MIDI and audio interfaces in the loop.

If you are using different sequencers in your system – for example, a trigger pattern generator for percussive sounds, a note sequencer for melodic sounds, and external hardware or a computer generating its own sounds, all in response to the same clock – each one of those could be introducing a different amount of delay in addition to what’s inherent in many modules.
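One way to reason about this is to total the delay along each path from the shared clock to the speakers, then compare each path against the fastest one. The path names and per-stage numbers below are hypothetical placeholders; substitute your own measurements.

```python
from typing import Dict, List


def skew_vs_fastest_ms(paths: Dict[str, List[float]]) -> Dict[str, float]:
    """Return how far each path lags the fastest path, in milliseconds.

    Each path is a list of per-stage delays (sequencer, module, interface...).
    """
    totals = {name: sum(stages) for name, stages in paths.items()}
    fastest = min(totals.values())
    return {name: total - fastest for name, total in totals.items()}


# Hypothetical stage delays in ms, all driven by the same clock.
paths = {
    "drums": [1.0, 4.0],    # trigger pattern generator -> sample player
    "melody": [10.0, 2.0],  # note sequencer -> digital oscillator
    "laptop": [3.0, 12.0],  # MIDI interface -> soft synth + audio interface
}
print(skew_vs_fastest_ms(paths))  # {'drums': 0.0, 'melody': 7.0, 'laptop': 10.0}
```

In this made-up example the absolute delays matter less than the differences between them: the melody lands 7 ms behind the drums even though every part is following the same clock.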

How We Perceive It

How you perceive that delay depends on what you are doing and on the resulting sound. If you are playing a keyboard, once the delay gets to around 10 ms or more, you start to perceive it as sluggish keyboard response; if you are playing a pad to trigger a percussive sound, you will probably notice it even sooner.

When layering two sounds together, many discuss these delays in terms of the “precedence effect,” which determines whether we hear the two sounds as an echo or fused into one complex sound. This threshold can be under 5 ms for percussive sounds, and over 40 ms for more complex, slower-attack sounds.

However, even if we hear two sounds – one slightly delayed – merged together into one complex sound, the timbre or character of that sound changes depending on the length of that delay. This can get into the area of “feel” – which may seem like a squishy concept, but is very real.
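A simple way to see why the delay changes the timbre: in the idealized case where a sound is mixed at equal level with a delayed copy of itself, the two cancel at frequencies where the delay equals an odd number of half-periods, producing a comb filter. Two different modules layered together won't cancel this cleanly, so treat this as an illustration of the principle rather than a model of any particular patch.

```python
def comb_notches_hz(delay_ms: float, max_hz: float = 20000.0) -> list:
    """Cancellation frequencies when a sound is summed with a copy delayed by delay_ms.

    Notches fall at f = (2k + 1) / (2 * delay), i.e. where the delay equals an odd
    number of half-periods, so the two copies arrive 180 degrees out of phase.
    """
    if delay_ms <= 0:
        raise ValueError("delay_ms must be positive")
    notches = []
    k = 0
    while True:
        f_hz = (2 * k + 1) * 1000.0 / (2.0 * delay_ms)
        if f_hz > max_hz:
            break
        notches.append(f_hz)
        k += 1
    return notches


print(comb_notches_hz(1.0)[:3])  # [500.0, 1500.0, 2500.0]
print(comb_notches_hz(5.0)[:3])  # [100.0, 300.0, 500.0]
```

Moving the delay from 1 ms to 5 ms moves the first notch from 500 Hz down to 100 Hz, which is one reason a few milliseconds can change the character of a layered sound even when it still fuses into a single event.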

The Patch Can Make a Difference

A digital module that relies on a trigger input – such as the WMD Crater mentioned above, Mutable Instruments Rings, a sample player, etc. – should hold off on playing a new sound until it’s completely ready, resulting in a ~1.5 to 7 ms delay among the modules I’ve measured in my own “gigging” case. This isn’t unique to modules; pre-patched synthesizers also hold off on outputting a new sound until they are ready. Some refer to this as “MIDI delay” when in fact, a MIDI note is transmitted in under a millisecond; the bigger delay is in the instrument detecting that note and responding to it. (I’ll be writing an Industry Stories post for Patreon soon about how much trouble I got into with manufacturers by reporting on this back in the 80s.)
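The arithmetic behind “under a millisecond” is straightforward: 5-pin MIDI runs at 31,250 baud, and each byte is sent as 10 bits (a start bit, eight data bits, and a stop bit). A Note On message is three bytes, or two when running status lets the sender omit a repeated status byte. The helper name below is just for illustration.

```python
MIDI_BAUD = 31250   # bits per second, fixed by the MIDI 1.0 specification
BITS_PER_BYTE = 10  # start bit + 8 data bits + stop bit


def midi_transmit_ms(num_bytes: int) -> float:
    """Time to shift num_bytes out of a 5-pin DIN MIDI port, in milliseconds."""
    return num_bytes * BITS_PER_BYTE * 1000 / MIDI_BAUD


print(midi_transmit_ms(3))  # 0.96  (full Note On: status, note, velocity)
print(midi_transmit_ms(2))  # 0.64  (Note On using running status)
print(midi_transmit_ms(9))  # 2.88  (4-note chord: one status byte, then 2 bytes per note)
```

Even a chord stays within a few milliseconds on the wire; the larger delays come from the receiving instrument detecting the message and responding to it.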

The results are far different if you’re patching a voice that mixes analog and digital modules. For example, take a patch that uses a digital oscillator, but an analog VCA and envelope generator. In theory, a new pitch CV should be sent to the oscillator at exactly the same time as the trigger to the envelope generator. And an analog envelope and VCA will respond to those new note triggers pretty much immediately.

However, the digital oscillator may take a few milliseconds to change its frequency in response to the new pitch CV at its input – and the envelope+VCA may already be open while this is taking place. As a result, you might hear a very brief slide or slur in the pitch at the start of each new note, particularly at higher frequencies where several cycles of a wave can happen inside a few milliseconds. I also have a plucked-sound digital module that does not delay its output long enough after the trigger for the incoming pitch CV to settle down; it too can display a short slur at the start of notes.
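Why the slur is easier to hear on higher notes comes down to simple arithmetic: the number of waveform cycles that play during the slur is the frequency times the slur duration. The 3 ms slur and the 55 Hz pitch at 0 V below are assumed values for illustration; real modules differ in both.

```python
def cycles_during_slur(freq_hz: float, slur_ms: float) -> float:
    """How many waveform cycles sound while the oscillator is still gliding to pitch."""
    return freq_hz * slur_ms / 1000.0


def volts_to_hz(cv_volts: float, ref_hz: float = 55.0) -> float:
    """1 V/octave conversion; ref_hz (the pitch at 0 V) is an assumed reference."""
    return ref_hz * 2.0 ** cv_volts


for cv in (1.0, 5.0):
    freq = volts_to_hz(cv)
    print(f"{cv} V -> {freq:.0f} Hz: {cycles_during_slur(freq, 3.0):.2f} cycles")
# 1.0 V -> 110 Hz: 0.33 cycles
# 5.0 V -> 1760 Hz: 5.28 cycles
```

At 110 Hz the slur is over before one cycle completes; at 1760 Hz more than five cycles play while the pitch is still moving, giving the ear much more to latch onto.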

Workarounds and Cures

There are several things users and manufacturers can do to reduce these delays – or at least their audible effects.

For one, if you’re noticing a pitch slur at the start of new notes, and there’s a digital module somewhere in the audio portion of the signal chain, you can insert a trigger delay module in front of the envelope generator that’s driving the VCA, and carefully dial in a tiny amount of delay until you don’t hear the problem anymore. There’s even a chance that switching to a digital envelope generator might introduce enough delay on its own, compared to an analog one. The Rossum Panharmonium mentioned above even has a separate Slice Clock Out trigger that takes into account its internal processing delay; I demonstrate it in this portion of a video I created on the Panharmonium.

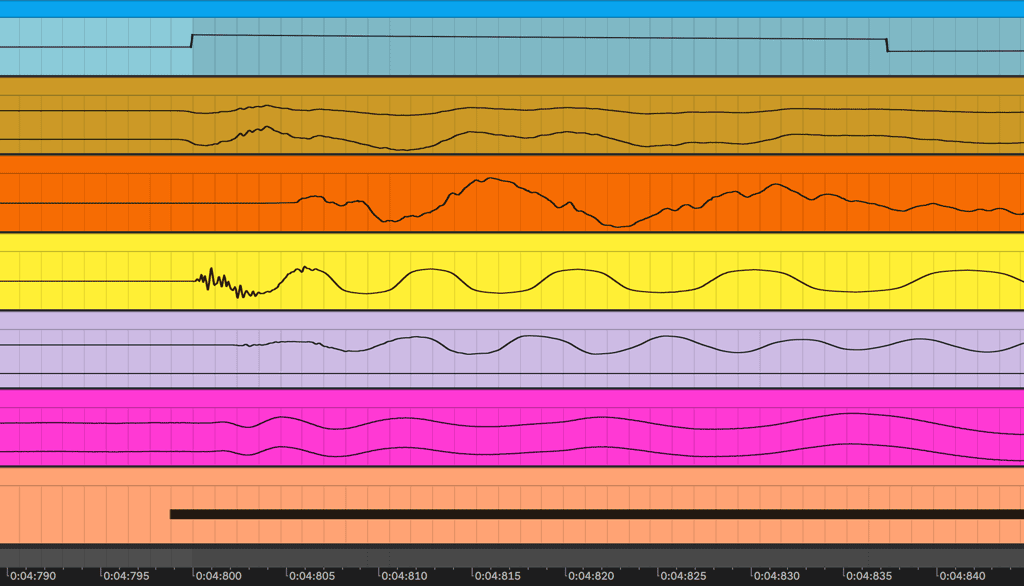
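The trigger-delay workaround can be modeled very simply. Assume, hypothetically, that the digital oscillator needs `settle_ms` to reach its new pitch after the CV changes, and that a slur shorter than `audible_threshold_ms` goes unnoticed; both numbers depend on the module and on your ears. Delaying the envelope trigger hides whatever part of the settling time it covers, and the loop mimics nudging a trigger delay knob upward until the problem goes away.

```python
from typing import Optional


def audible_slur_ms(settle_ms: float, trigger_delay_ms: float) -> float:
    """Portion of the pitch glide that happens after the envelope has opened."""
    return max(0.0, settle_ms - trigger_delay_ms)


def dial_in_trigger_delay(settle_ms: float, audible_threshold_ms: float = 0.5,
                          step_ms: float = 0.25, max_ms: float = 20.0) -> Optional[float]:
    """Smallest delay (in step_ms increments) that pushes the slur below the threshold."""
    steps = 0
    while steps * step_ms <= max_ms:
        delay_ms = steps * step_ms
        if audible_slur_ms(settle_ms, delay_ms) <= audible_threshold_ms:
            return delay_ms
        steps += 1
    return None  # the module needs more delay than this range offers


print(dial_in_trigger_delay(3.0))  # 2.5
```

The trade-off is that every millisecond added here delays the whole voice, so the goal is the smallest delay that does the job, not a generous one.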

Second, some digital audio workstations can help you reverse the effects of these various delays. Ableton Live, for example, can shift the timing of the MIDI clock it sends to each output device it identifies, with a separate offset for each device. In my own performance system, I have Live send clocks about 62 ms early to the Five12 Vector sequencer, which takes into account all of the delays involved in sending the MIDI clocks out and through a hub, through the Vector and onto the modules, and in the audio path from the modular back into Live. Live also allows you to set the “track delay” for each individual audio or MIDI track in a project; I have mine set to pull the output of Rings forward 2 ms, the output of an older Disting in sample player mode forward by 6 ms, et cetera. (I just wrote a detailed post for +5v and above Patreon subscribers going into how I manage latency in my own system.)
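To put that 62 ms in context: MIDI clock runs at 24 pulses per quarter note, so the length of one clock pulse depends on tempo. The sketch below converts an early-send offset into clock pulses and turns measured per-track latencies into negative track delays that pull each track forward. The 120 BPM tempo and the function names are illustrative assumptions; the 62, 2, and 6 ms figures come from the setup described above.

```python
MIDI_CLOCK_PPQN = 24  # MIDI timing clock: 24 pulses per quarter note


def clock_tick_ms(bpm: float) -> float:
    """Duration of one MIDI clock pulse at the given tempo."""
    return 60000.0 / (bpm * MIDI_CLOCK_PPQN)


def offset_in_ticks(offset_ms: float, bpm: float) -> float:
    """How many clock pulses an early-send offset spans at this tempo."""
    return offset_ms / clock_tick_ms(bpm)


def track_delays_ms(measured_latency_ms: dict) -> dict:
    """Negative delays that pull each track earlier by its own measured latency."""
    return {track: -latency for track, latency in measured_latency_ms.items()}


print(round(clock_tick_ms(120), 2))        # 20.83
print(round(offset_in_ticks(62, 120), 2))  # 2.98
print(track_delays_ms({"Rings": 2.0, "Disting sampler": 6.0}))
# {'Rings': -2.0, 'Disting sampler': -6.0}
```

At 120 BPM the 62 ms offset is just under three clock pulses. Because the pulse length changes with tempo while the hardware delays don't, an offset specified in milliseconds stays correct when the tempo changes.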

Third, manufacturers could put a higher priority on reducing the latency in their digital modules. Sometimes this requires a hardware upgrade with a faster processor and scanning circuitry. To single out one case for praise, the Expert Sleepers Disting EX starts playing samples twice as fast as its predecessors. Other fixes can be done with software updates alone: for example, after I brought these timing issues to their attention, 1010music cut in half the delay between a clock arriving at their Bitbox and Blackbox and those modules playing synchronized, looped samples.

I would also like to see more sequencer and trigger pattern generator modules include internal and preferably user-adjustable offsets for when they send out new note events. I know of at least one sequencer that sends triggers slightly after pitch CVs for a new sequence step, knowing some modules require a little time for changes at their pitch input to settle down. But they could go even further than that.

For example: Back in the 80s, Matt Isaacson and I designed the Sequential Studio 440 firmware to cue up notes one clock cycle early. Since they were already buffered up and waiting for the next clock before they went out, this allowed us to include a user parameter to send notes to each of its two MIDI outputs up to 15 ms earlier or later than the clock cycle they were associated with. The user could put their slower instruments on one of the two MIDI outputs, and adjust that one to send their notes out early.

This was done to compensate for Sequential’s own Prophet 2000 sampler, which took 8-15 ms from MIDI in to sound out, and which was slower than some of our earlier synths as well as the 440 itself. In terms of more modern gear, imagine a drum pattern controller that allowed you to slide each track earlier or later in time, both to deal with latency but also to alter the feel of each instrument in the groove. If we could figure out how to program this in assembly language with a lowly 8 MHz processor in the 80s, modern sequencer manufacturers should certainly be able to do it with their much faster microcontrollers and higher level programming languages today.
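Here is a hypothetical Python sketch of that idea; it is not a reconstruction of the actual 440 firmware, which was written in assembly. Because each note is cued up one clock period before it is due, a per-output offset can move it earlier by at most one clock period. The ±15 ms range matches the one described above, while the output names, note numbers, and clock period are made up for the example. Negative offsets mean earlier.

```python
from typing import Dict, List, Tuple

MAX_OFFSET_MS = 15.0  # +/-15 ms user range, as described for the Studio 440


def schedule_notes(notes: List[Tuple[int, str, int]],
                   output_offsets_ms: Dict[str, float],
                   clock_period_ms: float) -> List[Tuple[float, str, int]]:
    """Turn (clock_index, output, note) events into time-ordered (send_ms, output, note).

    Notes are assumed to be cued up one clock early, so a negative (early)
    offset can never exceed one clock period.
    """
    events = []
    for clock_index, output, note in notes:
        offset = output_offsets_ms.get(output, 0.0)
        offset = max(-MAX_OFFSET_MS, min(MAX_OFFSET_MS, offset))  # user range
        offset = max(-clock_period_ms, offset)                    # lookahead limit
        events.append((clock_index * clock_period_ms + offset, output, note))
    return sorted(events)


# Output "B" feeds a slower instrument, so its notes go out 8 ms early.
notes = [(1, "A", 60), (1, "B", 36), (2, "A", 62), (2, "B", 38)]
for event in schedule_notes(notes, {"A": 0.0, "B": -8.0}, clock_period_ms=20.0):
    print(event)
# (12.0, 'B', 36)
# (20.0, 'A', 60)
# (32.0, 'B', 38)
# (40.0, 'A', 62)
```

The same structure, with one offset per track instead of per output, is essentially the drum pattern controller imagined above: each track gets its own nudge, whether to cancel latency or to push or drag that instrument against the groove.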

Raising Your Game

If you’re at the point in your modular journey where you’re thrilled just to be making your own sounds and performing or recording the occasional piece, all of the above may sound like trying to play three-dimensional chess when good old-fashioned checkers is working just fine for you. And I tell users all the time that if they’re happy with what they have already, then don’t worry. But when you get to the point in your journey where you want to raise your game, and increase the polish on your recordings and live sets to include subtle things like “feel” that will make you come off as being more professional, timing is one of the things you should be thinking about.

Alias Zone Updates

I have two (maybe three?) more performances planned before a summer break. At that point, I go into album mode, editing, overdubbing, remixing, and mastering live and studio performances from the past several months into a few new album releases. The playlist linked to above is the tentative running order for the next album, showing the original live-in-the-studio performances.

Before then, I’ve sent a couple of “singles” off for mastering. One is Compassion: my performance at the SoCal Synth Society’s NAMMless Jamuary; that one will appear on the Alias Zone Bandcamp page in the upcoming weeks, and will be the basis for my performance in June at the Currents New Media Festival – but reworked in quad. (An alternate version is also slated for a future album release.)

The other is Devotion, which is a “lost track” from my performance at SoundQuest Fest last year and the associated album, We Only Came to Dream. I am currently deciding whether to include it as a track with We Only Came to Dream, or to make it part of a new album in the future alongside Compassion and the new piece I’m creating for my performance in May at Mountain Skies: The Barefoot Path. I released a performance video of a version of this piece last year; it is linked to here.

Also, I have a track on the recently released Drones for Peace fundraiser for Ukraine. More on that project below.

Learning Modular Updates

In addition to continuing to create more closed captions for my original Learning Modular Synthesis course, I’ve released my performance at SynthFest France on the Learning Modular YouTube page. That’s the link above.

(By the way, my new performances always appear first on the Alias Zone YouTube channel – won’t you please subscribe, so you can see them earlier, and so that I can eventually kill the ads YouTube runs with them? To do that, I need to reach 1000 subscribers, and 4000 hours of viewing in one year. The Learning Modular channel is already well past that, and ads are turned off for virtually every video on that channel; I would like to do the same for the Alias Zone channel.)

Patreon Updates

April saw three new loooong posts for my +5v and above Patreon subscribers:

- My observations on how Steve Roach approaches both his studio work and live performances, based on spending a week with him in February playing at the Phoenix Synth Fest and then working with him at his studio.

- A detailed breakdown of the trigger pattern generation and modification modules in my new performance system, including a survey of my favorite techniques and how I patch them.

- An even longer breakdown of the note generation part of my live system, including detailed explanations of how I distribute clocking in the system, and how I deal with issues of computer and module latency (think of it as the extended or “master class” version of the main article above).

Upcoming Events

May 6-8: Mountain Skies (White Horse Black Mountain, near Asheville, North Carolina)

I will be performing a live set on Friday, May 6, toward the end of the noon to 3 PM EDT block. There will be up to 30 acts over those two days (I have a list in this public Patreon post), plus a less formal hang and jam on Sunday the 8th. Click here to purchase either in-person or streaming tickets. I’ll be hanging around Black Mountain and Asheville before and after the concert so I can visit the Moogseum, take the public Moog factory tour, and meet with a few people.

June 24: Currents New Media Festival (Center for Contemporary Arts, Santa Fe, New Mexico)

I – along with James Coker (Meridian Alpha), Sine Mountain (David Soto), and Jill Fraser – will be performing in quad during a special electronic music night during the festival. There will be no live stream of this event, and seating is limited, so plan on getting to the Center for Contemporary Arts earlier in the day to enjoy the Currents festival, and then get in line for admission to the concert afterwards. Here is a link to all of the performances happening during the festival. Tickets are pay-what-you-can, with a recommended donation of $10; all proceeds go directly to the artists.

July: Denver, Colorado?

There are rumblings of a possible gig in Denver the first weekend of July. I’ll keep you posted as I learn more.

One More Thing…

I’m very proud to be part of the Drones for Peace project by the Colorado Modular Synth Society. It is a fundraiser to help those affected by the war in Ukraine, with all sales donated to humanitarian groups working to help the people of Ukraine. The first volume is already out on Bandcamp; a second one is scheduled to be released May 6.

If you’re thinking “drones…as in a single, dark, never-changing note or sustained sound? I think I’d rather watch paint dry – thank you” … then you are in for a big surprise. Instead, I believe you will be pleased and fascinated by the wide variety of evolving, story-telling soundscapes the musicians involved came up with, using different instrumentation including voice.

My own track – One Day, The Sun Will Rise Again – came from the sessions I recorded before and after my extended ambient performance at Currents 826 Gallery in Santa Fe in March (which was the main subject of last month’s newsletter). I am looking at releasing an album later this year curated from those recordings. But in the meantime, enjoy my track on Drones for Peace Volume 1, as well as the rest of the collection.

still keeping busy; looking forward to some studio time –

Chris

Great discussion on issues with digital vs analog modules for latency issues, Chris. I use a Ladik trigger delay module in my setup to help mitigate these issues.